Sai Sriskandarajah, Matt Chmiel, Teresa Porter and I created the Human Empowered, Pixel Optimized Camera Rendering Asynchronous Process for the Physical Computing without Computers course at ITP. This project recreates the digital camera rendering process, but instead of using microprocessors, human beings are enlisted to encode, digitize and render the image.

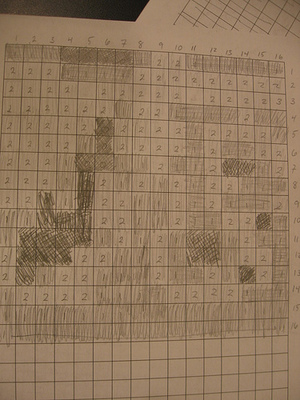

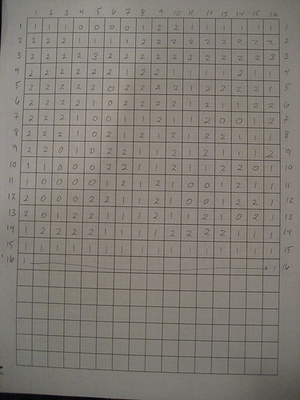

The first step in the process was to divide the image into individual pixels. We discussed and experimented with several possibilities, including dead reckoning, a latticework direct imaging grid and windowpane method. Finally we settled on using a medium-format camera’s focusing screen, with a grid drawn on it so that it would be easy to distinguish where each pixel was, and comfortably read off values. Next we experimented with pixel size and bit depth. A 8 x 8 grid produced too low resolution for an image to be interesting. We then attempted a 16 x 16 grid which too longer to read off, but created a reasonably recognizable portrait image.

Filled imaging grid

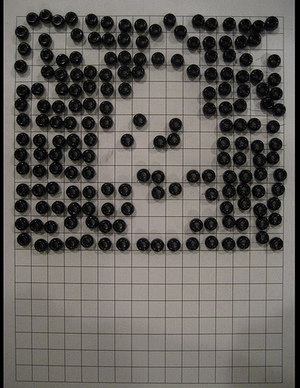

Simple black and white pixels at low resolution created an unsatisfying image. Two bit (black, dark, light and white) pixels gave much better results, and using three values instead of four seemed just as good to us, but much faster to determine. We went with using three values: black, gray and white. Various output formats were considered including marbles, sand, rubber stamps, inked paper, shaded boxes and beads.

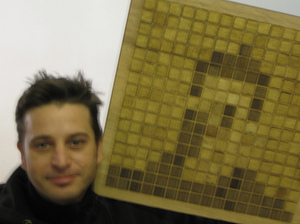

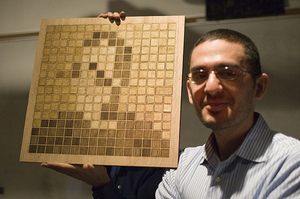

After examining several different methods, we decided that none were satisfying to us. Therefore we decided to try using the laser cutter to create a tiled grid of wooden pieces. A bit of layout, one long cold walk to the lumberyard and three trips between the bandsaw and Advanced Media Services resulted in a Rendering System.

We weren’t satisfied with the gray value so it was back to the store for watercolors and then again because we forgot to get a brush. Painting the gray tiles darker worked out well. It was time to do some portraits.

Our rendering system resulted in interesting images. Using humans meant that even though resolution was quite low, the brain’s edge detection, object recognition and foreground vs. background differentiation systems were incorporated. This made the image easier to recognize, yet still quite interesting to look at. The entire project was surprisingly enjoyable because rather than dealing with code issues or significant mechanical challenges, we got to interact with people and create something attractive as a final product. Also, because we weren’t focused on a particular artistic philosophy or technical agenda, the group found it easy to make decisions and work in tandem towards what surprised us all with rewarding results.

(photos by Sai Sriskandarajah, Rob Faludi and Matt Chmiel)